On October 23, 2025, the Whiting School of Engineering at Johns Hopkins University welcomed Aaron Roth, a professor of computer and cognitive science at the University of Pennsylvania, to discuss human-AI collaboration. His talk, titled “Agreement and Alignment for Human-AI Collaboration,” examined findings from three research papers that explore how artificial intelligence can assist humans in decision-making.

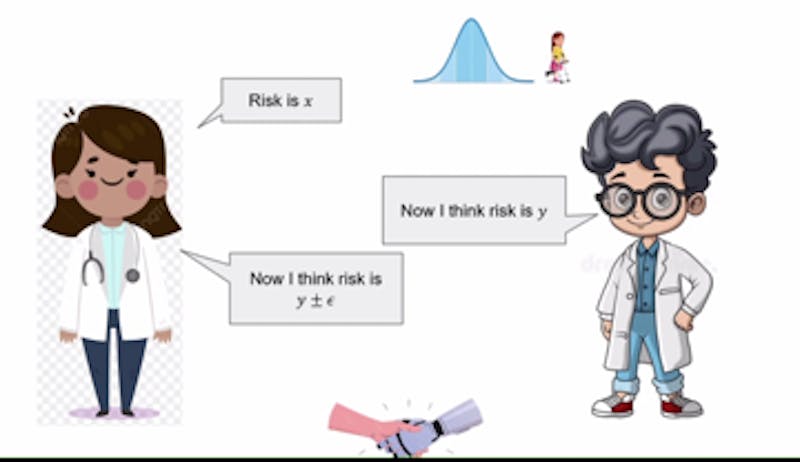

Roth’s presentation highlighted the potential of AI to enhance medical diagnostics, using the example of an AI system that assists doctors in diagnosing patients. The AI analyzes factors such as past diagnoses, blood types, and symptoms to make a prediction. The physician then assesses that prediction and can agree or disagree based on their own expertise and observations of the patient. In cases of disagreement, Roth explained, the doctor and the AI can engage in a dialogue, exchanging and revising their views until they reach a consensus.

Understanding Agreement Through a Common Prior

This process of reaching agreement hinges on a concept Roth refers to as a common prior: the doctor and the AI start with the same foundational assumptions about the world, even if they hold different pieces of evidence. Roth described this setting as Perfect Bayesian Rationality, in which each party understands what the other knows in general but lacks the specific details. Meeting these assumptions can be challenging, particularly in complex scenarios that involve multidimensional topics such as hospital diagnostic codes.

To facilitate agreement under weaker assumptions, Roth introduced the idea of calibration. He likened calibration to testing the accuracy of a weather reporter's forecasts. “You can design tests that they would pass if they were forecasting true probabilities,” he explained. In the doctor-AI setting, calibration ensures that each party's claims take the other's previous claims into account, which leads to more accurate assessments of risk. For instance, if an AI estimates a 40% risk for a treatment and the doctor suggests it is 35%, the AI would adjust its next claim to fall between these two figures, moving the conversation toward agreement.
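The weather-reporter test can be illustrated with a small calibration check. The sketch below is a generic binned check, not a method taken from Roth's papers; the function names, the bin count, and the sample data are all assumptions made for illustration. It groups forecasts into bins and compares the average forecast in each bin with how often the event actually happened.

```python
from collections import defaultdict


def calibration_table(forecasts, outcomes, n_bins=10):
    """Bin forecasts into equal-width bins and summarize each bin.

    Returns {bin_index: (mean_forecast, observed_frequency, count)}.
    The binning scheme is an illustrative choice, not part of the talk.
    """
    bins = defaultdict(list)
    for p, y in zip(forecasts, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # keep p == 1.0 in the last bin
        bins[idx].append((p, y))

    table = {}
    for idx, pairs in sorted(bins.items()):
        mean_forecast = sum(p for p, _ in pairs) / len(pairs)
        observed = sum(y for _, y in pairs) / len(pairs)
        table[idx] = (mean_forecast, observed, len(pairs))
    return table


def calibration_error(forecasts, outcomes, n_bins=10):
    """Count-weighted average gap between forecast and observed frequency."""
    table = calibration_table(forecasts, outcomes, n_bins)
    total = len(forecasts)
    return sum(n * abs(m - o) for m, o, n in table.values()) / total


# Hypothetical data: a forecaster who says "20%" and "80%" and is right
# about those frequencies, versus one who always says "90%".
good_forecasts = [0.2] * 5 + [0.8] * 5
good_outcomes = [1, 0, 0, 0, 0] + [1, 1, 1, 1, 0]
bad_forecasts = [0.9] * 10
bad_outcomes = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

good_error = calibration_error(good_forecasts, good_outcomes)
bad_error = calibration_error(bad_forecasts, bad_outcomes)
print(f"well-calibrated forecaster error: {good_error:.3f}")
print(f"overconfident forecaster error:   {bad_error:.3f}")
```

A forecaster reporting true probabilities would pass a check like this given enough data, which is the sense in which such tests are tests they "would pass."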

Addressing Misalignment in Goals

Roth also addressed potential misalignment between the goals of AI systems and those of healthcare professionals. He pointed out that an AI developed by a pharmaceutical company may favor that company's products when recommending treatments. To mitigate this risk, he proposed that doctors consult multiple large language models (LLMs). By comparing recommendations from several models, physicians can identify the best treatment option, and the resulting market competition among AI providers encourages better alignment and less bias.
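The idea of consulting several models and keeping the best answer can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Roth's formal mechanism: the model names, treatments, and scores are hypothetical, and `doctor_utility` stands in for however the physician would actually judge a recommendation.

```python
def pick_best_recommendation(recommendations, doctor_utility):
    """Return the (model_name, treatment) pair the doctor rates highest.

    recommendations: dict mapping a model name to its recommended treatment.
    doctor_utility:  function scoring a treatment from the doctor's own
                     point of view (hypothetical stand-in for clinical judgment).
    """
    return max(recommendations.items(), key=lambda item: doctor_utility(item[1]))


# Hypothetical recommendations from three differently biased models.
recommendations = {
    "model_a": "brand_drug_x",   # e.g. built by the maker of drug X
    "model_b": "generic_drug_y",
    "model_c": "brand_drug_z",
}

# Hypothetical scores reflecting the doctor's own assessment of each option.
doctor_scores = {"brand_drug_x": 0.6, "generic_drug_y": 0.8, "brand_drug_z": 0.5}

best_model, best_treatment = pick_best_recommendation(
    recommendations, lambda treatment: doctor_scores[treatment]
)
print(best_model, best_treatment)
```

Because the final choice is made with the doctor's own scoring, a single biased model cannot steer the outcome as long as some other model offers a better option.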

The discussion concluded with Roth emphasizing the importance of understanding real probabilities, meaning the accurate probabilities that reflect the complexities of the real world. Although such precise probabilities are ideal, they are often not necessary. It is frequently sufficient for predicted probabilities to be unbiased under certain conditions. By leveraging data, doctors and AI can collaborate effectively to reach informed agreements on treatments and diagnoses.
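One common way to write down "unbiased under certain conditions" is shown below. It is offered as an illustrative formalization rather than Roth's exact statement, and the symbols are assumptions: $p$ is the predicted probability, $y \in \{0, 1\}$ is the actual outcome, and $E$ is a condition of interest, such as a patient subgroup.

$$
\mathbb{E}\left[\, y - p \mid E \,\right] = 0
$$

Calibration is the special case in which the condition is the forecast itself: among all cases where the prediction equals some value $v$, the outcome occurs a fraction $v$ of the time.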

Roth’s insights into human-AI collaboration underscore the significance of alignment and agreement in leveraging AI’s potential in various fields, particularly in healthcare. As AI continues to evolve, fostering effective communication and understanding between humans and machines will be essential for harnessing its capabilities responsibly.