Artificial intelligence is increasingly shaping the medical advice patients receive, raising concerns about its quality. According to Dr. Isaac Kohane, founding chair of the department of biomedical informatics at Harvard Medical School, nearly half of Americans now rely on AI chatbots for health-related inquiries, ranging from lifestyle modifications to second opinions on serious conditions like cancer. However, users may not fully understand the implications of this reliance.

AI companies often prioritize safety features, such as recognizing signs of self-harm and avoiding harmful recommendations. Yet these safeguards do not guarantee that the advice reflects the best available medical practice. Consider a patient diagnosed with a slowly growing brain tumor near the optic nerve. Many health systems advocate surgical intervention, but a specialized cancer center in the Midwest has developed a successful radiation treatment supported by 14 years of positive outcomes.

When this patient discusses options with their doctor, the hospital’s AI system may still recommend surgery, reflecting the prevalent standard of care rather than the most effective treatment available. Even if the patient seeks a referral for radiation therapy, their insurance company’s AI may also favor surgery, creating a cycle of compliance with established norms that may not serve the patient’s best interests.

Potential Risks in AI-Driven Health Care

As healthcare institutions increasingly adopt AI-driven systems, the risk of a standardized, AI-enforced approach to medical care grows. In a health care system valued at $5 trillion, the financial pressures to utilize AI for clinical decision-making can overshadow patient welfare. This shift may lead to errors of commission, such as unnecessary testing, and errors of omission, where preventive measures are overlooked in favor of more expensive treatments.

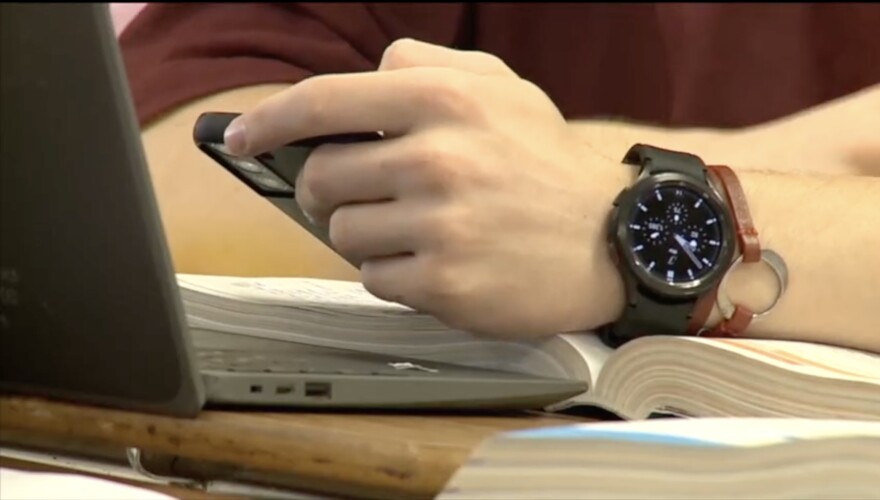

To navigate this evolving landscape, patients can take proactive steps to ensure the AI advice they receive prioritizes their health. The first is to become a savvy AI patient by using AI's distinctive advantages: it does not run out of patience, and it can answer from many perspectives. Patients should consider asking the same question framed from different viewpoints, such as, “What would you recommend if you were a surgeon?” followed by the same question framed from a physical therapist’s perspective.

Research from Harvard Medical School indicates that popular chatbots like Claude, ChatGPT, and Gemini often provide differing recommendations on the same medical cases. While multiple subscriptions to these AI tools may incur costs, they can still be less expensive than traditional co-pays. Patients are encouraged to bring AI-generated advice to their healthcare providers, fostering a collaborative discussion about potential treatment options.

Empowering Patients Through Data Ownership

Another crucial aspect of navigating AI health advice is data ownership. The 21st Century Cures Act guarantees patients access to their medical records, many of which can be obtained through hospital patient portals. If a hospital connects to platforms like Apple Health, patients can download files that chatbots can analyze. Gathering and organizing such data may require effort, but as AI technology advances, it will increasingly assist patients in making sense of their records.

However, patients should remain cautious: some chatbot companies make no guarantee against retaining user data or learning from it. This raises significant questions about privacy and control over personal health information. The growing influence of AI in healthcare also creates a pressing need for policy development. Legislators should proceed carefully to avoid entrenching existing market leaders and stifling the emergence of patient-centered alternatives.

The implementation of health privacy laws, such as HIPAA, often favors commercial interests, leaving patients and independent researchers struggling to access the infrastructure they need. Future legislation should focus on promoting transparency rather than dictating specific medical practices. This includes requiring clear labeling of the data used to train AI systems and of the influences shaping their clinical reasoning.

Understanding the origins and influences behind AI health tools is vital for fostering a healthcare landscape that serves patients. By demanding transparency, patients can make informed decisions about their health care, ensuring that AI serves their interests rather than those of powerful financial stakeholders.

As AI continues to evolve, it presents both opportunities and challenges in healthcare. Patients are encouraged to treat their health data as valuable, scrutinize AI recommendations critically, and advocate for transparency from developers. The alternative is to let a multi-trillion-dollar industry dictate healthcare decisions, potentially prioritizing profit over patient welfare.